Enabling SWaP-optimized EW solutions through accurate FPGA power modeling

July 18, 2019

Modern electronic warfare (EW) systems, especially those deployed in harsh, SWaP-constrained (size, weight, and power) environments, must include not only high-performance processing elements but also the technology to cool these high-power devices. These challenges are particularly relevant to the design of the compact field-programmable gate array (FPGA) modules at the core of next-generation systems. To stay ahead of adversaries and ensure control of the electromagnetic spectrum (EMS), it is critical to leverage the latest device technology. However, each new generation of FPGA devices brings higher processing density, and with it greater thermal-management challenges.

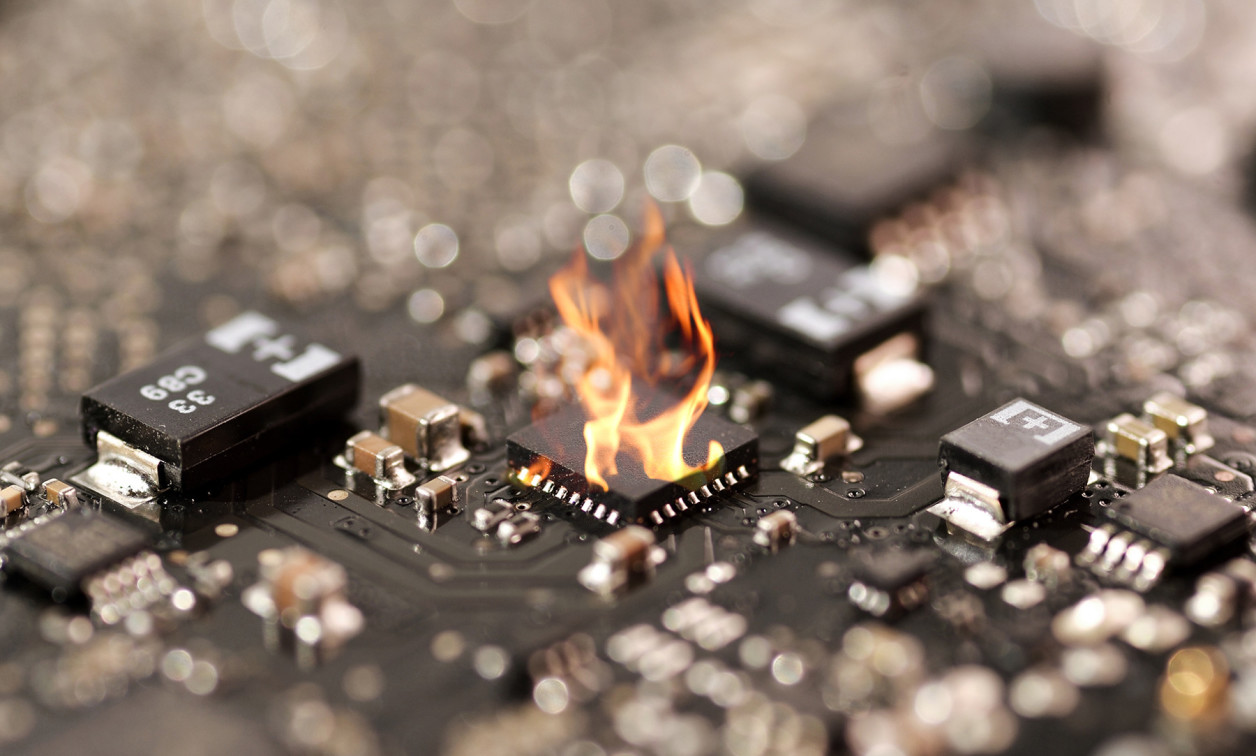

Historically, advances in FPGA technology have supported each new generation of high-performance EW systems. In the last few years, however, thermal management has emerged as the new limiting factor in FPGA performance. Modern FPGA devices have the size and speed to support processing-intensive EW and spectrum-management algorithms, but they are unusable if the system cannot remove the heat they generate. Additionally, because power usage depends on the specific algorithm loaded into the FPGA, this limitation may not be discovered until the FPGA module is integrated into the customer’s next higher assembly (Figure 1).

Figure 1 | A graphical representation of FPGA module temperature. Source: Mercury Computer.

The consequences of poor FPGA thermal management are often severe. In borderline cases, elevated operating temperatures reduce the mean time between failures (MTBF), increasing the likelihood that the module will fail in the field. In more severe cases, the FPGA module will simply be unable to run a processing-intensive algorithm. Poor power modeling can also result in a power supply that cannot deliver sufficient current to the FPGA devices.
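A first-order check makes these two failure modes concrete. The sketch below assumes a single core rail, a single lumped junction-to-reference thermal resistance (`theta_jr_c_per_w`, in °C/W) for the cooling path, and illustrative numbers; the function and field names are hypothetical and none of the values come from Mercury or from a device datasheet.

```python
from dataclasses import dataclass


@dataclass
class OperatingPointCheck:
    core_current_a: float
    junction_temp_c: float
    current_ok: bool
    thermal_ok: bool


def check_operating_point(core_power_w: float, core_voltage_v: float,
                          supply_limit_a: float, total_power_w: float,
                          reference_temp_c: float, theta_jr_c_per_w: float,
                          tj_max_c: float) -> OperatingPointCheck:
    """Hypothetical first-order supply and thermal check for one FPGA.

    Assumes the core rail power is drawn at a fixed voltage and that all
    device power flows through one lumped thermal resistance to a
    reference surface (for example, a conduction-cooled card edge).
    """
    core_current = core_power_w / core_voltage_v                          # I = P / V
    junction_temp = reference_temp_c + total_power_w * theta_jr_c_per_w  # Tj = Tref + P * theta
    return OperatingPointCheck(
        core_current_a=core_current,
        junction_temp_c=junction_temp,
        current_ok=core_current <= supply_limit_a,
        thermal_ok=junction_temp <= tj_max_c,
    )


# Illustrative numbers only: 30 W on a 0.85 V core rail, 40 W total device power.
result = check_operating_point(core_power_w=30.0, core_voltage_v=0.85,
                               supply_limit_a=40.0, total_power_w=40.0,
                               reference_temp_c=71.0, theta_jr_c_per_w=0.8,
                               tj_max_c=100.0)
print(result)
```

With these made-up inputs the supply has headroom (about 35.3 A against a 40 A limit), but the estimated junction temperature of about 103 °C exceeds the assumed 100 °C limit: exactly the kind of borderline thermal case a rough model can miss.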

To develop effective and efficient thermal-management hardware, and to size the FPGA power supplies properly, designers need an accurate power model for the FPGA devices. Because power usage depends on the specific algorithm, the model must be tailored to the application. To support design optimization, it must also allow parameter sweeping.
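Why the model must be tailored to the application follows from the standard first-order CMOS power approximation. This is a simplification for illustration, not the FPGA manufacturer’s model: total power is a static (leakage) term plus a dynamic term summed over functional blocks $i$,

$$
P_{\text{total}} = P_{\text{static}}(T_j, V) + \sum_i \alpha_i \, C_i \, V^2 f_i
$$

where $\alpha_i$ is the activity (toggle) factor of block $i$, $C_i$ its effective switched capacitance, $V$ the core supply voltage, and $f_i$ its clock frequency. $C_i$ and $f_i$ are fixed by the hardware and the logic design, but $\alpha_i$ is set by the data and the algorithm, so the same module can dissipate very different power under different workloads. The dynamic term is linear in $\alpha_i$ and $f_i$, which keeps sweeping those parameters cheap, while the static term rises with junction temperature $T_j$, coupling the power model to the thermal model.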

Traditional FPGA power modeling

Standard design methodology uses the power model provided by the FPGA device manufacturer. To yield accurate results, however, these models require detailed inputs, such as clock frequencies, resource utilization, and signal toggle rates, that the FPGA module designer does not always know. To compensate for this lack of accuracy, the designer typically has two options.

The first option is to overengineer the power supply and cooling systems. Using the most conservative analysis, the designer selects a power supply that far exceeds the expected current draw of the devices. Increasing the physical size of the module and adding thermal conduction pathways then enables the module to handle the power dissipation, even if actual power levels differ from the rough model. While this approach will likely yield a product that meets the power specifications, the product will be larger, heavier, and more expensive than necessary. Because most systems are constrained in size, weight, and cost, an overengineered solution is often unacceptable.
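The cost of the conservative approach can be illustrated with a hypothetical one-parameter model in which dynamic power scales linearly with an assumed average toggle rate. The coefficients, toggle rates, and 25% design margin below are invented for illustration; they only show how a worst-case activity assumption inflates the supply rating and, by extension, the cooling hardware.

```python
def required_supply_current_a(static_w: float, dynamic_w_full_toggle: float,
                              toggle_rate: float, rail_v: float,
                              margin: float = 0.25) -> float:
    """Supply current rating for an assumed toggle rate (hypothetical model).

    dynamic_w_full_toggle is the dynamic power the design would draw if
    every net toggled on every clock; real designs toggle far less.
    """
    power_w = static_w + dynamic_w_full_toggle * toggle_rate
    return power_w / rail_v * (1.0 + margin)


# Illustrative values: 6 W static, 80 W dynamic at 100% toggle, 0.85 V rail.
worst_case = required_supply_current_a(6.0, 80.0, toggle_rate=0.50, rail_v=0.85)
modeled = required_supply_current_a(6.0, 80.0, toggle_rate=0.15, rail_v=0.85)
print(f"worst-case sizing: {worst_case:.1f} A, model-based sizing: {modeled:.1f} A")
```

With these made-up inputs, the worst-case assumption calls for a supply more than 2.5 times larger than the application-specific estimate (about 67.6 A versus 26.5 A).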

The second option is to perform multiple design iterations. In the first iteration, the designer uses best guesses for the size of the power supply and the cooling architecture. After a prototype is built, the power is measured and a second iteration is designed to account for the actual levels. This approach also results in a product that meets the power specifications, but it delays the schedule and increases design cost. Moreover, for this approach to succeed, the FPGA module designer must have detailed knowledge of the end user’s specific algorithm; without an accurate representation of that algorithm, the designer cannot measure the actual power used by the prototype.

Another approach to FPGA power modeling

One approach to addressing these challenges is to divide the modeling process into phases that follow the stages of the design. An early power prediction at the start of development gives the designer the information needed to design both the power supply and the mechanical cooling elements. A prototype is then built using the results of this early prediction. After fabrication, the power is measured by installing validation IP, designed to realistically emulate customer algorithms, in the FPGA devices. The results of this testing are fed back into the model to improve its accuracy for future designs.
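The feedback step at the end of this flow can be sketched as a per-class calibration factor: the ratio of measured to predicted power from one prototype, applied to the early prediction for the next design. This assumes that prototype measurements can be attributed to individual functional classes (for example, by enabling validation IP one class at a time); the class names, function names, and numbers are hypothetical.

```python
def calibration_factors(predicted_w: dict, measured_w: dict) -> dict:
    """Ratio of measured to predicted power for each class measured on a prototype."""
    return {name: measured_w[name] / predicted_w[name]
            for name in predicted_w if name in measured_w}


def apply_calibration(new_predictions_w: dict, factors: dict) -> dict:
    """Scale a new design's early predictions; classes with no history keep factor 1.0."""
    return {name: power * factors.get(name, 1.0)
            for name, power in new_predictions_w.items()}


# Prototype of design N (illustrative numbers).
factors = calibration_factors(
    predicted_w={"sample_processing": 20.0, "stream_interfaces": 8.0},
    measured_w={"sample_processing": 23.0, "stream_interfaces": 7.2},
)
# Early prediction for design N+1, corrected with what design N showed.
corrected = apply_calibration(
    {"sample_processing": 30.0, "stream_interfaces": 10.0, "control_plane": 2.0},
    factors,
)
print(corrected)
```

A single ratio per class is the simplest possible correction; a fuller flow would also record the operating conditions (clock, temperature, voltage) under which each measurement was taken.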

Implementation of the early power-prediction modeling

To apply the results of one modeling activity to a subsequent design, the early power-prediction tool must simplify the process of entering the various parameters into the model supplied by the FPGA device manufacturer, without sacrificing accuracy for efficiency. To balance the two, the designer takes the manufacturer’s power model as provided and adds automation to generate its inputs. Separating the FPGA logic into groups based on function simplifies defining those inputs (Figure 2).
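A minimal sketch of that automation, assuming a manufacturer’s estimator that takes one input row per resource type with a clock frequency and toggle rate. The row layout, field names, block names, and numbers are hypothetical, not any vendor’s format. The designer describes each functional block once, and the script expands it into the many low-level rows the estimator needs.

```python
from dataclasses import dataclass, field


@dataclass
class FunctionalBlock:
    name: str
    clock_mhz: float
    toggle_rate: float                              # assumed fraction of nets toggling per clock
    resources: dict = field(default_factory=dict)   # resource type -> count


def to_model_rows(blocks):
    """Expand functional blocks into one estimator input row per resource type."""
    rows = []
    for block in blocks:
        for resource, count in block.resources.items():
            rows.append({
                "block": block.name,
                "resource": resource,
                "count": count,
                "clock_mhz": block.clock_mhz,
                "toggle_rate": block.toggle_rate,
            })
    return rows


blocks = [
    FunctionalBlock("sample_processing", 500.0, 0.30,
                    {"LUT": 120_000, "FF": 180_000, "DSP": 1_200}),
    FunctionalBlock("control_plane", 125.0, 0.05,
                    {"LUT": 15_000, "FF": 20_000, "BRAM": 40}),
]
for row in to_model_rows(blocks):
    print(row)
```

Because each row inherits its block’s clock and toggle rate, changing one block-level parameter updates every affected input at once, which is what makes later parameter sweeps practical.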

Figure 2 | Modeling divides the FPGA into functional blocks.

Additionally, this approach benefits from a common architecture across multiple FPGA module designs, including a consistent but scalable control-plane infrastructure, proven data-plane infrastructure (interfaces, framers, and switches), and standard clocking and synchronization IP. The FPGA design blocks are partitioned based on their properties, such as sample data, sample processing, stream data, control plane, stream interfaces, and the like. By reusing this proven infrastructure and modeling design blocks according to their respective properties, the model accuracy is improved with each new design.
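One way to capture this reuse, sketched under assumptions: keep a library of default model inputs keyed by the property classes named above, let each new block inherit its class default unless overridden, and fold refined values from each validated design back into the library. The default toggle rates and blending weight are placeholders, and the weighted-average update is an illustrative choice, not Mercury’s documented method.

```python
from typing import Dict, Optional

# Placeholder default toggle rates per property class (not measured data).
DEFAULT_TOGGLE: Dict[str, float] = {
    "sample_data": 0.40,
    "sample_processing": 0.30,
    "stream_data": 0.20,
    "stream_interfaces": 0.25,
    "control_plane": 0.05,
}


def toggle_for(block_class: str, library: Dict[str, float],
               override: Optional[float] = None) -> float:
    """Use an explicit override when the algorithm is known, else the class default."""
    return override if override is not None else library[block_class]


def refine_library(library: Dict[str, float], block_class: str,
                   refined_toggle: float, weight: float = 0.5) -> Dict[str, float]:
    """Blend a refined value from a validated design into the class default.

    Returns a new library so earlier designs' inputs stay reproducible.
    """
    updated = dict(library)
    updated[block_class] = (1.0 - weight) * library[block_class] + weight * refined_toggle
    return updated


library_v2 = refine_library(DEFAULT_TOGGLE, "control_plane", refined_toggle=0.09)
print(toggle_for("control_plane", library_v2))               # blended class default
print(toggle_for("sample_data", library_v2, override=0.55))  # explicit override wins
```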

Because this approach uses automation to populate the manufacturer’s model, it enables rapid design-space exploration through parameter sweeping. It also allows the user to optimize the model so that it converges on measured data, improving the accuracy of the model inputs for future designs.
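Both uses can be sketched with a deliberately simple block model, P = P_static + k · f · α, where `k` is a hypothetical watts-per-MHz coefficient at 100% toggle. Sweeping evaluates every combination of clock and toggle rate; fitting treats the toggle rate as the unknown input and chooses it to minimize squared error against measurements taken at several clocks. Because the model is linear in α, the least-squares solution has a closed form. All names and values are illustrative.

```python
import itertools
from typing import Dict, Iterable, Tuple


def block_power_w(static_w: float, k_w_per_mhz: float,
                  clock_mhz: float, toggle_rate: float) -> float:
    """Hypothetical first-order block model: P = P_static + k * f * alpha."""
    return static_w + k_w_per_mhz * clock_mhz * toggle_rate


def sweep(static_w: float, k_w_per_mhz: float, clocks_mhz: Iterable[float],
          toggle_rates: Iterable[float]) -> Dict[Tuple[float, float], float]:
    """Evaluate the model over every (clock, toggle rate) combination."""
    return {(f, a): block_power_w(static_w, k_w_per_mhz, f, a)
            for f, a in itertools.product(clocks_mhz, toggle_rates)}


def fit_toggle_rate(static_w: float, k_w_per_mhz: float,
                    measurements: Dict[float, float]) -> float:
    """Least-squares toggle rate from {clock_mhz: measured_w} pairs.

    With x = k * f and y = P_measured - P_static the model is y = alpha * x,
    so alpha = sum(x * y) / sum(x * x).
    """
    xs = [k_w_per_mhz * f for f in measurements]
    ys = [p - static_w for p in measurements.values()]
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)


grid = sweep(5.0, 0.05, clocks_mhz=[250.0, 400.0, 500.0], toggle_rates=[0.1, 0.2, 0.3])
worst_point = max(grid, key=grid.get)
print(worst_point, grid[worst_point])

# Illustrative bench data taken at three clock settings.
alpha = fit_toggle_rate(5.0, 0.05, {250.0: 7.5, 400.0: 9.0, 500.0: 10.0})
print(f"fitted toggle rate: {alpha:.3f}")
```

The fitted toggle rate then replaces the original assumption as the model input for the next design.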

Design validation

As previously mentioned, both modeling FPGA power usage and validating the hardware require a deep understanding of the customer algorithm. Because different applications vary in complexity and produce different bit-toggle rates, power validation is specific to individual use cases. To provide this flexibility, Mercury has developed the EchoCore Power Load IP, which enables more precise control of FPGA resource utilization.

The Power Load IP enables the user to compare and correlate power-model predictions with empirical measurements. This comparison helps refine the assumptions used as model inputs, thereby improving the accuracy of the power model for future FPGA designs. Production hardware can also be validated before shipping to the end user, reducing the likelihood of integration issues and failures in the field.
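The correlation step can be as simple as a per-rail relative error between the model and bench measurements taken with the load IP running, with any rail outside an agreed tolerance flagged for review. The rail names, the 10% tolerance, the function name, and the numbers below are illustrative assumptions.

```python
from typing import Dict, List, Tuple


def correlate(predicted_w: Dict[str, float], measured_w: Dict[str, float],
              tolerance: float = 0.10) -> Tuple[Dict[str, float], List[str]]:
    """Relative error (measured - predicted) / predicted per rail, plus rails out of tolerance."""
    errors = {rail: (measured_w[rail] - pred) / pred
              for rail, pred in predicted_w.items() if rail in measured_w}
    flagged = sorted(rail for rail, err in errors.items() if abs(err) > tolerance)
    return errors, flagged


errors, flagged = correlate(
    predicted_w={"core": 30.0, "aux": 3.0, "transceiver": 6.0},
    measured_w={"core": 34.5, "aux": 3.1, "transceiver": 5.7},
)
for rail, err in errors.items():
    print(f"{rail:12s} {err:+.1%}")
print("out of tolerance:", flagged)
```

A positive error means the model underestimated that rail’s power; flagged rails point to the model inputs, for example toggle-rate or clock assumptions, worth revisiting before the next design.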

Managing heat going forward

As rival nations leverage readily available commercial technology to deploy advanced EW capabilities, maintaining control of the EMS requires the rapid and efficient development of new, high-performance systems optimized for SWaP-constrained environments. To ensure reliable operation, every phase of the design must incorporate thermal modeling that is accurate, flexible, and efficient enough to support parameter sweeping. The modeling process must also include a way to validate the hardware and correlate empirical data with modeled results. Because actual power dissipation depends on the algorithm, this process must be flexible enough to represent many different applications.

Optimizing power handling and understanding design tradeoffs are critical to program success, and they require a supplier with deep technical expertise as well as the ability to collaborate effectively with the end user to solve the most difficult thermal-management and other challenges.

Mario LaMarche is product marketing manager for Mercury Systems. He previously worked at Teledyne Microwave Solutions and Samtec as product line manager and engineer. Mario holds MS and BS degrees in electrical engineering/RF and microwave design. Readers may reach Mario at mario.lamarche@mrcy.com.

Mercury Systems

www.mrcy.com