Common Weakness Enumeration (CWE) defines cybersecurity vulnerability landscape for mission-critical applications

March 20, 2019

The Common Weakness Enumeration (CWE), a categorization system for software weaknesses and vulnerabilities, now provides a common vocabulary for the source-code analysis tools used by developers of mission-critical embedded systems. Several distinct groupings of CWE items are apparent: those associated with general coding practices, those focused on security-specific parts of a software system such as authentication and encryption, and those that can be mitigated through an appropriate choice of programming language or tools. The CWE has also recently incorporated a “Common Quality Enumeration,” which generalizes its applicability to all kinds of software weaknesses, not strictly those that relate to cybersecurity.

Laying out the CWE

The Common Weakness Enumeration (CWE, at http://cwe.mitre.org) has emerged as a de facto reference for every security-conscious developer of mission-critical embedded systems. The CWE categorizes the known kinds of cybersecurity weaknesses into a single, systematically numbered list; the most recent version (version 3.2, from January 2019) contains just over 800 weaknesses and almost 1,200 items in total. The CWE grew out of a project by the MITRE Corporation (Bedford, Massachusetts and McLean, Virginia) to characterize and summarize the growing Common Vulnerabilities and Exposures (CVE) list; MITRE also maintains the CVE list, in conjunction with the National Vulnerability Database (NVD) maintained by the National Institute of Standards and Technology (NIST).

Together, the CVE and the NVD log every publicly known cybersecurity vulnerability and exposure, going back to 1999. The CWE groups the weaknesses underlying these vulnerabilities and exposures into distinct categories, providing a common vocabulary for tools, cybersecurity experts, and the broader mission-critical software development community. Version 3.2 also includes more general quality weaknesses, which originated in the CQE (Common Quality Enumeration) project. These weaknesses do not relate directly to security, but they can nevertheless cause major problems during a system’s life cycle, and they can often be detected by the same kinds of tools that detect security-focused weaknesses.

The CWE is used in various contexts, but perhaps the most important is in connection with source-code analysis tools. Historically, each such tool had its own vocabulary, often with confusing or ambiguous descriptions of precisely what sorts of software problems it was designed to detect. With the emergence of the CWE, however, many source-code analysis tools have begun to identify the problems they detect by the associated unique CWE identifier. This shared vocabulary enables results from multiple tools to be combined and compared in a meaningful way. Combining tools is worthwhile, because different tools tend to have different strengths when it comes to detecting potential source-code defects.
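To make the idea of combining results concrete, the following C sketch assumes each tool’s report has already been parsed into (file, line, CWE identifier) records. The `finding` struct and `count_new_findings` function are hypothetical illustrations, not part of any real tool’s interface. Because both tools use CWE identifiers, deciding whether a second tool has found something new reduces to comparing identifiers and locations.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical record for one finding reported by a source-code analysis tool. */
struct finding {
    const char *file;  /* source file the finding refers to */
    int line;          /* line number within that file */
    int cwe_id;        /* CWE number, e.g. 120 for CWE-120 */
};

/* Two findings describe the same weakness if they name the same CWE
 * at the same source location. */
static bool same_weakness(const struct finding *x, const struct finding *y)
{
    return x->cwe_id == y->cwe_id
        && x->line == y->line
        && strcmp(x->file, y->file) == 0;
}

/* Counts findings reported by tool B that tool A did not report,
 * i.e. what running the second tool adds. */
size_t count_new_findings(const struct finding *a, size_t na,
                          const struct finding *b, size_t nb)
{
    assert((a != NULL || na == 0) && (b != NULL || nb == 0));
    size_t added = 0;
    for (size_t i = 0; i < nb; i++) {
        bool seen = false;
        for (size_t j = 0; j < na && !seen; j++) {
            seen = same_weakness(&b[i], &a[j]);
        }
        if (!seen) {
            added++;
        }
    }
    return added;
}
```

A real merge would also need to cope with tools that report the same problem at slightly different lines, or under a more general or more specific CWE entry; this sketch deliberately ignores both.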

CWE examples

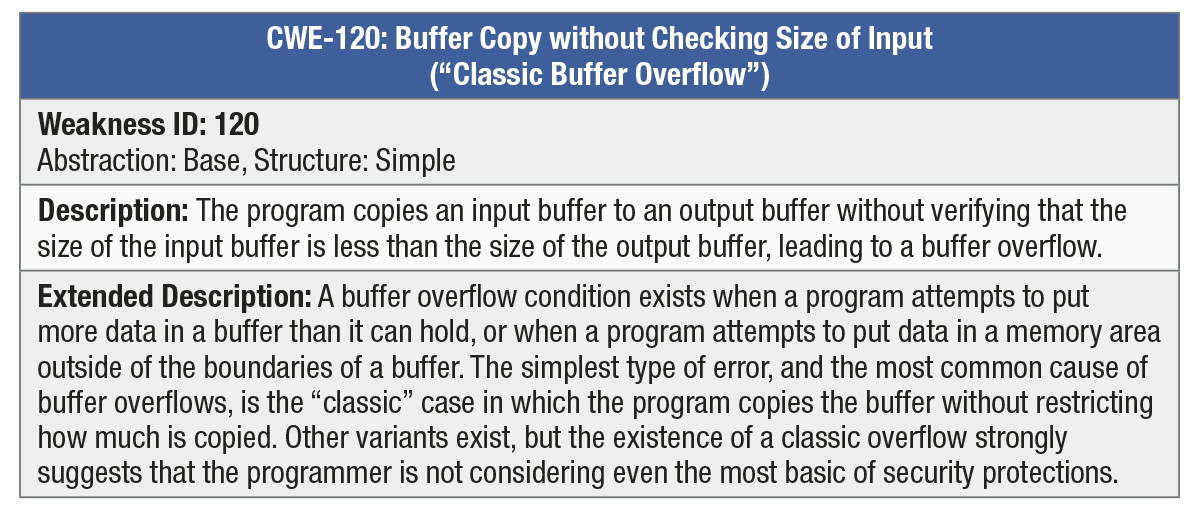

The CWE is best illustrated by examples. When first looking at the CWE, it helps to distinguish different sorts of weaknesses. Some we could call universal, in that they are a concern in every application; a prime example is the classic buffer overflow (CWE-120, “Buffer Copy without Checking Size of Input”). Essentially all programs work with tables, arrays, or strings of characters, and in any such program, attempting to put more into the table, array, or string than it can hold is certain to cause problems: either immediately, if the language catches the attempt with a run-time check, or later, if the data corrupted by the overflow is used as input to some subsequent phase of the computation. The CWE-120 entry on http://cwe.mitre.org is shown in Table 1.
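A minimal C sketch of the weakness and one common mitigation follows. C performs no bounds check on `strcpy`, so the first function (a hypothetical example, not taken from the CWE entry) writes past `dst` whenever `src` is too long. The second function assumes the caller knows the destination’s capacity and rejects input that would not fit.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* CWE-120 pattern (do not use): strcpy copies until the terminating NUL
 * without knowing how large dst is, so a long src overruns the buffer. */
void copy_name_unchecked(char *dst, const char *src)
{
    strcpy(dst, src);
}

/* Mitigated version: the caller passes the destination capacity, and the
 * copy is refused if src (plus its terminating NUL) does not fit.
 * Returns 0 on success, -1 if src is too long. */
int copy_name_checked(char *dst, size_t dst_size, const char *src)
{
    assert(dst != NULL && src != NULL);
    size_t len = strlen(src);
    if (dst_size == 0 || len >= dst_size) {
        return -1; /* not enough room for the characters plus the NUL */
    }
    memcpy(dst, src, len + 1); /* copies the terminating NUL as well */
    return 0;
}
```

Rejecting the input rather than silently truncating it is a design choice here; truncation can itself cause problems if later code relies on the full value.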

Table 1: CWE-120.

In addition to CWE-120, there is a set of related weaknesses that deal with indexing into an array-like structure outside the bounds of the array. A search on the CWE website for “array out of bounds” produces a list of relevant CWE entries. These entries include more than just a description of the weakness: they also give examples illustrating it in those programming languages where it is not detected automatically by compile-time or run-time checks. Furthermore, the entries for some of the more fundamental CWEs (such as CWE-120) provide additional sections, such as Modes Of Introduction, Applicable Platforms (typically the programming languages where the weakness is more prevalent), Common Consequences, Likelihood Of Exploit, and Potential Mitigations. The mitigations section identifies languages or platforms where the weakness is unlikely to occur, as well as software development or deployment practices that reduce the likelihood of exploit in environments where the language or platform does not automatically prevent the weakness.
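As an illustration of a mitigation in a language without automatic checks, here is a C sketch that validates an externally supplied array index before use (the concern of CWE-129, Improper Validation of Array Index). The `sensor_readings` table and its size are hypothetical. Using an unsigned `size_t` index means one comparison covers both ends of the range: a negative value converted to `size_t` becomes very large and is rejected. In a language such as Ada, the equivalent check is performed by the language itself at run time.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define SENSOR_COUNT 4  /* hypothetical table size, for illustration only */

static int sensor_readings[SENSOR_COUNT];

/* Stores a reading only if the index is in range; returns false otherwise
 * instead of writing past the end of the array. */
bool store_reading(size_t index, int value)
{
    if (index >= SENSOR_COUNT) {
        return false;
    }
    sensor_readings[index] = value;
    return true;
}

/* Reads a reading only if the index is in range; returns false otherwise
 * instead of reading memory outside the array. */
bool load_reading(size_t index, int *out)
{
    assert(out != NULL);
    if (index >= SENSOR_COUNT) {
        return false;
    }
    *out = sensor_readings[index];
    return true;
}
```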

In addition to universal weaknesses such as buffer overflow and its relatives, there are weaknesses that are more application-specific, depending on the nature of the application. For example, appropriate use of authentication and authorization might not be relevant for an application that is not accessible outside a physically secure location. CWE-284, Improper Access Control, addresses this particular application-specific area of weakness (Table 2).
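To show what proper access control can look like in code, here is a deny-by-default C sketch. The roles, operations, and grant table are hypothetical, and the sketch assumes the caller’s role has already been established by a separate authentication step; it illustrates only the authorization half of the problem.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical roles and operations for a networked embedded subsystem. */
enum role { ROLE_NONE, ROLE_OBSERVER, ROLE_OPERATOR, ROLE_MAINTAINER };
enum operation { OP_READ_STATUS, OP_CHANGE_MODE, OP_LOAD_SOFTWARE };

/* Explicit allow-list: each row grants one role one operation. */
struct grant {
    enum role role;
    enum operation op;
};

static const struct grant grants[] = {
    { ROLE_OBSERVER,   OP_READ_STATUS },
    { ROLE_OPERATOR,   OP_READ_STATUS },
    { ROLE_OPERATOR,   OP_CHANGE_MODE },
    { ROLE_MAINTAINER, OP_READ_STATUS },
    { ROLE_MAINTAINER, OP_LOAD_SOFTWARE },
};

/* Deny by default: access is allowed only if an explicit grant exists. */
bool is_permitted(enum role who, enum operation what)
{
    for (size_t i = 0; i < sizeof grants / sizeof grants[0]; i++) {
        if (grants[i].role == who && grants[i].op == what) {
            return true;
        }
    }
    return false;
}
```

Listing what is allowed, rather than what is forbidden, means that a newly added operation is inaccessible until someone deliberately grants it.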

Table 2: CWE-284.

As with buffer overflow, a set of other CWE entries focuses on specific elements of this general area of weakness; a search on the CWE website for “authentication and authorization” produces a list of them.

Although such application-specific CWEs might not be relevant to all systems, the proportion of systems with external connections grows every day. Military systems that used to be accessible only to the pilot, for example, are now being networked to improve coordination and situational awareness. Even if the other systems in the network are themselves secure, the possibility of interference with their communications remains. So even purely computer-to-computer connections may require authentication and authorization, to guard against spoofing or corruption by unfriendly parties. The bottom line is that a growing proportion of the formerly application-specific CWEs are becoming universal in their relevance.

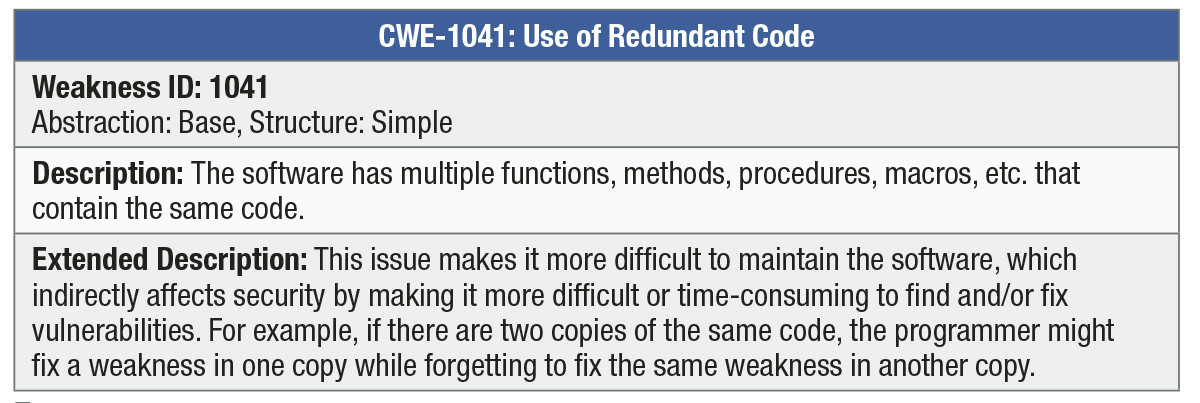

As mentioned above, a new group of CWE entries, just added in CWE version 3.2, is not as directly related to security, but is still relevant to the construction and evolution of robust mission-critical embedded systems. This group, derived from an experimental Common Quality Enumeration (CQE) built several years ago, includes CWE entries such as CWE-1041, Use of Redundant Code (Table 3).

Table 3: CWE-1041.

As the table’s Extended Description indicates, duplicate copies of the same code can seriously affect the maintenance and evolution of a system. This is also a universal quality and maintainability issue; it occurs in almost every system, independent of programming language or development process. There is even a three-letter acronym devoted to it: DRY, for “Don’t Repeat Yourself!” Code duplication is in some ways the flip side of “Don’t reinvent the wheel.” Neither repeating yourself nor reinventing the wheel is the right answer for programmers building long-lived mission-critical systems. The keys are abstraction, modularity, and a good library system underlying the programming platform, so that reusable functionality can be packaged into components that are called or instantiated multiple times, without falling back on copy-and-paste.
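The refactoring that the DRY principle calls for can be sketched in C as follows. The pitch and roll limits are hypothetical values chosen for illustration; the point is that the duplicated range-limiting logic shown in the comment is replaced by one function that can be fixed and tested in a single place.

```c
#include <assert.h>

/* Before (the CWE-1041 pattern): the same range-limiting logic is pasted
 * into two places, so a fix to one copy can easily be missed in the other.
 *
 *   if (pitch < -30) pitch = -30; else if (pitch > 30) pitch = 30;
 *   if (roll  < -60) roll  = -60; else if (roll  > 60) roll  = 60;
 */

/* After: the logic lives in one reusable, testable function. */
static int clamp_int(int value, int lo, int hi)
{
    assert(lo <= hi);
    if (value < lo) {
        return lo;
    }
    if (value > hi) {
        return hi;
    }
    return value;
}

/* Hypothetical callers: each limit is now a single call. */
int limit_pitch(int pitch) { return clamp_int(pitch, -30, 30); }
int limit_roll(int roll)   { return clamp_int(roll, -60, 60); }
```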

CWE-compatible tools

As evidenced by the above small sample of CWE entries, the CWE relates directly to the kinds of challenges faced daily during the development of software-intensive systems. With this common vocabulary, it is now possible for a software project to identify and evaluate tools based on whether they can detect, or even suggest corrections for, instances of such weaknesses in the source code of the systems being developed or deployed. In fact, MITRE has established a registry of tools that follow the CWE nomenclature, both in terms of controlling their operation, and in terms of the reports they generate. This list of tools is maintained as part of MITRE’s CWE Compatibility and Effectiveness Program, and may be found on the web at https://cwe.mitre.org/compatible/compatible.html.

Currently, about 35 companies have more than 50 tools listed in the registry of CWE-compatible tools. To be listed in the registry, a tool must satisfy at least the first four of these six criteria: (1) it must be CWE-searchable, using CWE identifiers; (2) it must include, or allow users to obtain, the associated CWE identifiers; (3) it must accurately link to the appropriate CWE identifiers; (4) it must describe CWE, CWE compatibility, and CWE-related functionality; (5) it must explicitly list the CWE IDs that the capability claims coverage and effectiveness against locating in software; and (6) it must show, on the website, test results of assessing software for those CWEs.

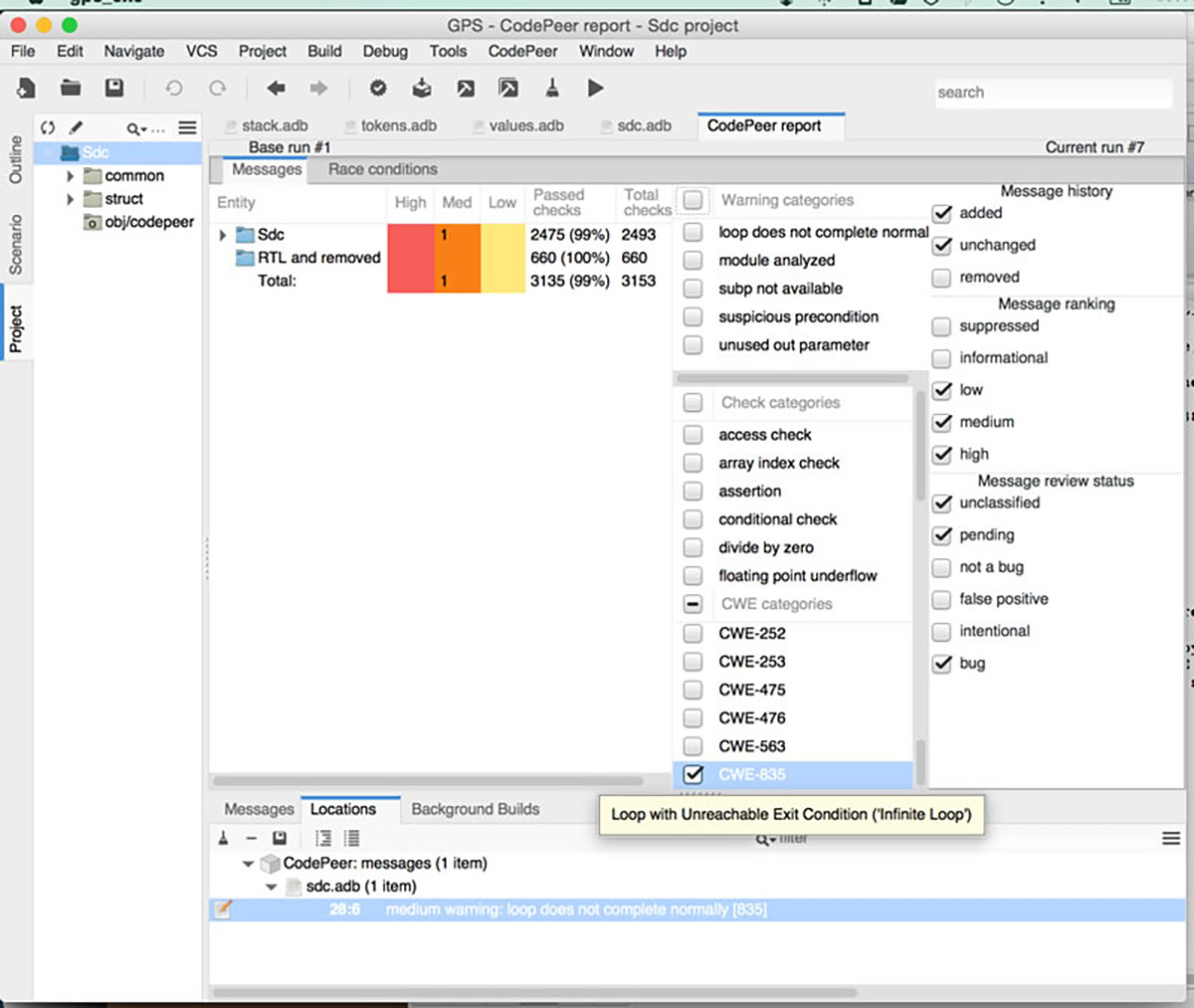

As an example of how some tools integrate CWE identifiers into their user interface, Figure 1 shows a screen shot from a static-analysis tool detecting various CWE issues in a sample program (dining_philosophers.adb, based on the classic Dining Philosophers problem).

Figure 1: Screen shot demonstrating the tool’s ability to detect CWE issues in a sample program.

As shown above, each displayed message includes the CWE identifier(s) associated with the identified problem (in this case, both buffer overflows and numeric range overflows). The graphical interface also lets the user select, by CWE identifier, the particular weaknesses of current interest, filtering out messages that do not relate to them. Although not shown here, hovering over a CWE identifier displays the short description of the weakness, so the user need not memorize the meaning of each CWE ID.
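The filtering behavior described above can be sketched in C as follows, assuming each message carries a list of CWE numbers. The `message` struct and `filter_messages` function are hypothetical and are not the interface of the tool shown in Figure 1.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical analyzer message carrying zero or more CWE identifiers. */
struct message {
    const char *text;
    const int *cwe_ids;   /* CWE numbers attached to this message */
    size_t cwe_count;
};

/* True if the message mentions any CWE in the user's selection. */
static bool matches_selection(const struct message *m,
                              const int *selected, size_t n_selected)
{
    for (size_t i = 0; i < m->cwe_count; i++) {
        for (size_t j = 0; j < n_selected; j++) {
            if (m->cwe_ids[i] == selected[j]) {
                return true;
            }
        }
    }
    return false;
}

/* Stores pointers to matching messages in out[] (which must have room for
 * n entries) and returns how many matched. */
size_t filter_messages(const struct message *msgs, size_t n,
                       const int *selected, size_t n_selected,
                       const struct message **out)
{
    assert(out != NULL || n == 0);
    size_t kept = 0;
    for (size_t i = 0; i < n; i++) {
        if (matches_selection(&msgs[i], selected, n_selected)) {
            out[kept++] = &msgs[i];
        }
    }
    return kept;
}
```

Messages without any CWE identifier never match a selection, which corresponds to hiding messages that do not relate to the chosen weaknesses.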

As another example, Figure 2 shows a screen shot from a tool that supports formal proof of program properties for programs written in the SPARK subset of Ada.

Figure 2: Screen shot shows the appropriate CWE identifier.

Here again, the messages indicating places where the tool could not fully automate the proof of the absence-of-run-time-errors (AoRTE) property include the appropriate CWE identifier. The figure also shows a search capability that can filter out non-CWE messages (in this case, by typing “CWE” in the search box) or find messages that refer to a specific CWE ID.
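A search box like the one described above has one subtlety worth illustrating: a naive substring search for “CWE-12” would also match CWE-120 and CWE-125. The C sketch below, a hypothetical matching rule rather than the actual tool’s behavior, treats a bare “CWE” query as “any message mentioning a CWE” and requires a query ending in a digit to match a complete identifier. Matching is case-sensitive for simplicity.

```c
#include <assert.h>
#include <ctype.h>
#include <stdbool.h>
#include <string.h>

/* Returns true if `text` satisfies the search `query`:
 *  - "CWE" keeps any message that mentions a CWE identifier at all;
 *  - "CWE-120" keeps only messages naming exactly CWE-120, so that a
 *    search for CWE-12 does not also match CWE-120 or CWE-125. */
bool message_matches(const char *text, const char *query)
{
    assert(text != NULL && query != NULL);
    size_t qlen = strlen(query);
    if (qlen == 0) {
        return true; /* an empty search keeps everything */
    }
    bool query_ends_in_digit = isdigit((unsigned char)query[qlen - 1]);
    for (const char *p = strstr(text, query); p != NULL;
         p = strstr(p + 1, query)) {
        /* When the query ends in a digit, the match must not be followed
         * by another digit: a whole identifier, not a prefix of one. */
        if (!query_ends_in_digit || !isdigit((unsigned char)p[qlen])) {
            return true;
        }
    }
    return false;
}
```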

The definition of the Common Weakness Enumeration by MITRE has made an important contribution to the overall process of developing more secure and robust software-intensive systems. It provides a common vocabulary that aids internal communication within software development organizations and allows users to understand and compare the capabilities of tools designed to scan and analyze the source code of mission-critical software. Tool designers will find that building CWE-compatible features into static-analysis and formal-methods toolsets lets users readily understand the kinds of security and robustness issues that can be eliminated.

S. Tucker Taft is VP and director of language research at AdaCore and is senior advisor for AdaCore’s “QGen” Model-Based Development tool suite. Tucker led the Ada 9X language design team, culminating in the February 1995 approval of Ada 95 as the first ISO-standardized object-oriented programming language. His specialties include programming language design, advanced static-analysis tools, formal methods, real-time systems, parallel programming, and model-based development. Tucker is a member of the ISO Rapporteur Group that developed Ada 2005 and Ada 2012. Tucker has also been designing and implementing a parallel programming language called “ParaSail,” and is working on defining parallel programming extensions for Ada as part of the forthcoming Ada 2020 standard.

AdaCore

www.adacore.com